Intelligent Traffic Management – Applying Analytics on Internet of Things

Extending the previous article, “Revolutionizing the Agriculture Industry”, this article discusses how the Internet of Things (IoT) and analytics are going to revolutionize traffic management in the modern world. Day by day, roads are becoming deluged with vehicles, but road infrastructure remains unchanged. Congestion in cities is cited as a major transportation problem around the globe. According to the Texas A&M Transportation Institute, in 2011 traffic congestion in the USA alone cost $121 billion through travel delay and wasted 2.9 billion gallons of fuel.

The world is becoming more intelligent. Sensors in cars and on roads are connected to the internet, and devices exchange data with each other. Using this intelligence, fleets can avoid accidents, predict car failures, take preventive maintenance actions, and more. Let us say hello to John.

John drives to the office every day. His intelligent car receives data about on-the-road events, such as accidents, that have occurred ahead on his route, and guides him to an alternative, more efficient route. With this intelligence, John reaches his destination, and his car tells him where a parking slot is available! Such services not only save John a great deal of time and fuel but also give him a tension-free life. Are any such services already available?

Meet Zenryoku Annai

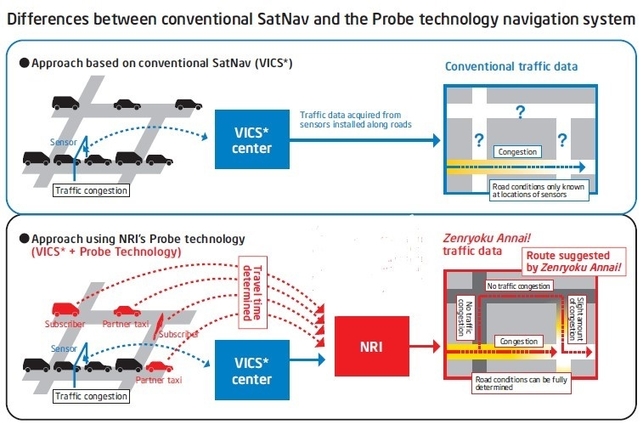

Zenryoku Annai is a service provided in Japan by Nomura Research Institute (NRI). Using this service, subscribers all over Japan can plot the shortest travel routes, avoid traffic snarls and estimate when they will arrive at their destinations. It combines information from satellite navigation systems linked to sensors at fixed locations along roads with traffic data derived through statistical analysis of position and speed information from subscribers, moving vehicles and even pedestrians. Data from thousands of taxicabs is also added to the mix. Using all this information, Zenryoku Annai analyzes road conditions and helps drivers plan routes more accurately, and over a wider range, than is possible with conventional GPS systems. Because more and more vehicles are added to the service over time, it uses in-memory computing technology, which has improved search speed by a factor of more than 1,800 over the previous relational database management system: 360 million data points can be processed in just one second.
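The quoted speed-up can be unpacked with a little arithmetic. Assuming the factor of 1,800 compares the two systems on the same workload measured in data points per second (the source does not say how it was benchmarked), the previous system’s implied throughput is:

```latex
\[
r_{\text{old}} = \frac{r_{\text{new}}}{1800}
= \frac{360 \times 10^{6}\ \text{points/s}}{1800}
= 2 \times 10^{5}\ \text{points/s}
\]
```

Because the factor is “more than 1,800”, this roughly 200,000 points per second is an upper bound on the old system’s rate.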

Fig: 1.1 In a conventional satnav system, road conditions are known only at locations where sensors are installed, but with NRI’s probe technology, road conditions can be determined much more accurately from position and speed data delivered by in-car units, mobile phones and sensors.

Using this data, the service can also suggest the best alternative route to the user. Zenryoku Annai is not yet fully effective, since not all vehicles on the road are connected to it, but very soon one can expect everything that runs on the road to be connected to the internet.
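The probe idea can be sketched in a few lines: reports from connected vehicles are grouped by road segment and averaged into a current speed estimate, which then yields a travel time. This is a simplified illustration, not NRI’s method. The `ProbeReport` type, the segment IDs and the free-flow fallback are hypothetical, map matching of GPS positions to segments is assumed to have happened upstream, and a plain arithmetic mean stands in for the more careful statistical analysis a production system would use.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ProbeReport:
    """One hypothetical probe reading, already map-matched to a road segment."""
    segment_id: str
    speed_kmh: float


def estimate_segment_speeds(reports, free_flow_kmh):
    """Average probe speeds per segment listed in free_flow_kmh.

    Segments with no probe reports fall back to their free-flow speed,
    an assumption made for this sketch. Reports for unlisted segments are ignored.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for report in reports:
        totals[report.segment_id] += report.speed_kmh
        counts[report.segment_id] += 1

    estimates = {}
    for segment, v_free in free_flow_kmh.items():
        n = counts.get(segment, 0)
        estimates[segment] = totals[segment] / n if n else v_free
    return estimates


def travel_time_min(length_km, speed_kmh):
    """Travel time in minutes for a segment of the given length and speed."""
    return length_km * 60.0 / speed_kmh


# Hypothetical readings: two slow cars on A-B, one car on B-C, none on C-D.
reports = [
    ProbeReport("A-B", 18.0),
    ProbeReport("A-B", 22.0),
    ProbeReport("B-C", 55.0),
]
free_flow = {"A-B": 50.0, "B-C": 60.0, "C-D": 40.0}
speeds = estimate_segment_speeds(reports, free_flow)
print(speeds)                               # {'A-B': 20.0, 'B-C': 55.0, 'C-D': 40.0}
print(travel_time_min(2.0, speeds["A-B"]))  # 6.0
```

Segment C-D shows the limitation noted above: without probes on it, the sketch can only assume free-flow conditions.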

Driverless Cars: Another example!

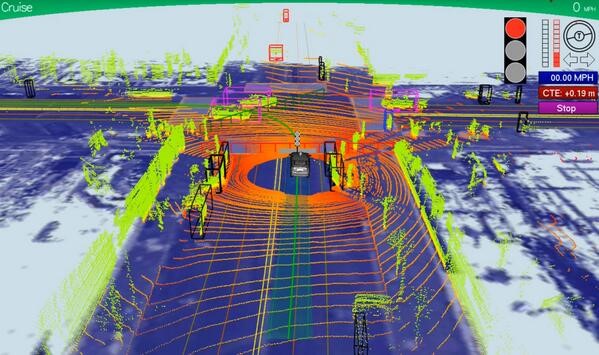

A driverless (automated) car is an ideal example where IoT and real-time analytics play a crucial part. As the car travels along a road, it interacts with traffic signals and other vehicles through various sensor points, and it routes itself by weighing congestion against distance so that it reaches the destination in the minimum time. A pilot test run of Google’s cars (Fig: 1.2) showed that a car generates 1 gigabyte of data per minute from its surroundings, and these cars depend entirely on IoT and analytics to make decisions.
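The trade-off between distance and congestion can be sketched with Dijkstra’s shortest-path algorithm run under two different edge costs. This is a minimal illustration, not Google’s or any vendor’s actual routing logic. The road network, the `km`/`kmh` edge fields and the speeds are hypothetical, and live congestion is assumed to be already summarized as a current speed per road.

```python
import heapq


def shortest_path(graph, source, target, cost):
    """Dijkstra's algorithm; cost(edge) must return a non-negative weight."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == target:
            break
        for nxt, edge in graph.get(node, {}).items():
            nd = d + cost(edge)
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if target not in dist:
        return None, float("inf")  # target unreachable
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]


def by_distance(edge):
    return edge["km"]


def by_travel_time(edge):
    # Minutes to cover the road at its current (congestion-affected) speed.
    return edge["km"] * 60.0 / edge["kmh"]


# Hypothetical network: the short route via A is jammed, the longer one via B flows.
roads = {
    "Home": {"A": {"km": 3.0, "kmh": 15.0}, "B": {"km": 5.0, "kmh": 60.0}},
    "A": {"Office": {"km": 2.0, "kmh": 20.0}},
    "B": {"Office": {"km": 4.0, "kmh": 60.0}},
}

print(shortest_path(roads, "Home", "Office", by_distance))     # (['Home', 'A', 'Office'], 5.0)
print(shortest_path(roads, "Home", "Office", by_travel_time))  # (['Home', 'B', 'Office'], 9.0)
```

The shortest route (5 km) would take 18 minutes in this made-up traffic, while the 9 km detour takes 9 minutes, which is why a congestion-aware cost, rather than distance alone, minimizes travel time.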

Fig: 1.2

Conclusion

IoT with analytics is at a very nascent stage, and many barriers restrict its growth, e.g. data security, individual privacy, implementation problems and technology fragmentation. The average American commuter lost 14 hours per year to traffic delay in 1982, but by 2010 this had surged to 34 hours per year; if the problem remains unsolved, it may even rise to 40 hours per year. With population density exploding and space in short supply, it is impossible to keep increasing road capacity; a practically viable option is instead to harness the power of data analytics on IoT.

Disclaimer:

Views expressed in this article are based solely on publicly available information. No representation or warranty, express or implied, is made as to the accuracy or completeness of any information contained herein. Aaum expressly disclaims any and all liability based, in whole or in part, on such information, any errors therein or omissions therefrom.

References

- https://www.techopedia.com/2/31434/trends/big-data/internet-of-things-iot-and-real-time-analytics-a-marriage-made-in-heaven

- http://www.sap.com/bin/sapcom/en_ca/downloadasset.2013-09-sep-23-16.drivers-avoid-traffic-jams-with-big-data-and-analytics-pdf.html

Does data science bring value to your organization? Hang on, what is this data science, really? Why should we care about it? You really should care, because it might change the way you run your business in the near future, whether you like it or not, just as software/IT changed the world a few decades ago! IT is now omnipresent, and those who didn’t care to change course suddenly had to pay a steep price to do so. Today, thankfully, the cost of IT adoption is very low, since the industry has matured enough to provide the right solutions at a low price. But the initial period was crucial. People were extremely cautious about implementation, evaluated ROI and questioned why they should invest, considering the new systems, servers, recruitment, etc. involved. In those times, a clear ROI from IT could not be calculated. IT was nascent, and there were gross blunders like the Y2K issue. But the world changed, and IT has touched almost all facets of life.

The event opened with a featured keynote address by Mr. Rajesh Kumar, Founder & Managing Director, AAUM Research & Analytics Pvt Ltd. Rajesh emphasized the need for data science practices in organizations and how insights from data science are transforming the data-driven world.

Taking it forward, Ms. Parvathy Sarath, Director & data evangelist at AAUM, with extensive experience in finance, retail, social media, human resources and government, gave a featured presentation on “Data Science – learn, develop and deploy” with her Lead Data Scientist, Mrs. Praveena Sri. They explained the importance of the R tool, understanding data through R, data visualization and predictive analysis, and how beneficial these could be for an organization in the long run. In the second session, “Data Science – learn, develop and deploy analytics in your organization”, Mr. Rajesh Kumar and Ms. Parvathy Sarath dealt with topics such as logistic analysis, multivariate analysis and decision trees using R.

Mr. Elayaraja

Mr. Bala Chandran from AAUM Research and Analytics gave a featured presentation on Cloud for Data Science. He covered topics such as computing platforms, cloud services and cloud deployment tools.

The last session, Do It Yourself, built on the conversations and work done in the day’s previous sessions. It helped participants test their understanding of data science and ensured inclusion and broad participation, so that everyone benefited from the conference.

To summarize the entire two-day event, Mr. Rajesh Kumar and Mr. Bala Subramanian led an insightful interactive session to improve learning, interaction and engagement among the participants. Finally, it was time for the conference to end!

Is organized retail killing the kirana shops in India? Things looked pretty different a few years ago, when many people declared the end of days for kirana shops. But we have not seen any such major change; rather, there have been a few very interesting developments. Organized retail outlets did kick off in a big way, but they did not kill the mom-and-pop shops. In fact, everybody grew in the booming Indian economy. Long story short, mom-and-pop shops not only survived, they scaled their operations and expanded to more locations! Enter Walmart. What people said about Walmart’s business in India a few years ago was totally different from Walmart’s actual B2B operations. Walmart’s B2B business exclusively targeted small and medium businesses. Walmart B2B enabled kirana store owners to become “Best Price Modern Wholesale stores” and provided benefits such as:

The various eTail topics discussed are
